The Observability Problem Isn't Data Volume Anymore—It's Context

For years, the observability industry has been obsessed with one thing: data volume. We've built incredible pipelines, optimized agents, and scaled storage to handle petabytes of logs, metrics, and traces. The promise was simple: collect more data, get more visibility.

But we've hit a wall.

Nearly every engineering team now faces a log deluge. We're drowning in telemetry data but still struggling to find the signal in the noise. Root cause analysis (RCA) remains a slow, manual, and often painful process of sifting through millions of log lines, jumping between siloed dashboards, and trying to piece together a coherent story. The problem isn't a lack of data; it's a lack of context. The data is there, but it's fragmented across tools and not readily available to the systems that need it most.

This is why traditional dashboards, as powerful as they are, are no longer enough. They show you what's happening, but they don't help you understand why or what to do next.

The Rise of Agentic AI and The Context Problem

The conversation has already shifted. We’re moving from static dashboards to dynamic, "agentic" AI workflows. These are tools that don't just display data; they proactively act on it. Imagine an AI that can summarize a complex incident, recommend a fix, or even take action to resolve it. This is the promise of agentic AI.

But this promise hinges on a critical piece of the puzzle: context. A large language model (LLM) is only as good as the information it's given. Without the right data, at the right time, in the right format, an AI assistant is little more than a gimmick. Its usefulness is capped by its limited context window and by its inability to reach the rich, real-time data streaming through your system.

This is the "context is the problem" moment. We realized that to truly operationalize AI in observability, we needed a new kind of infrastructure—one that acts as the intelligent bridge between the raw telemetry data and the powerful AI tools that need it.

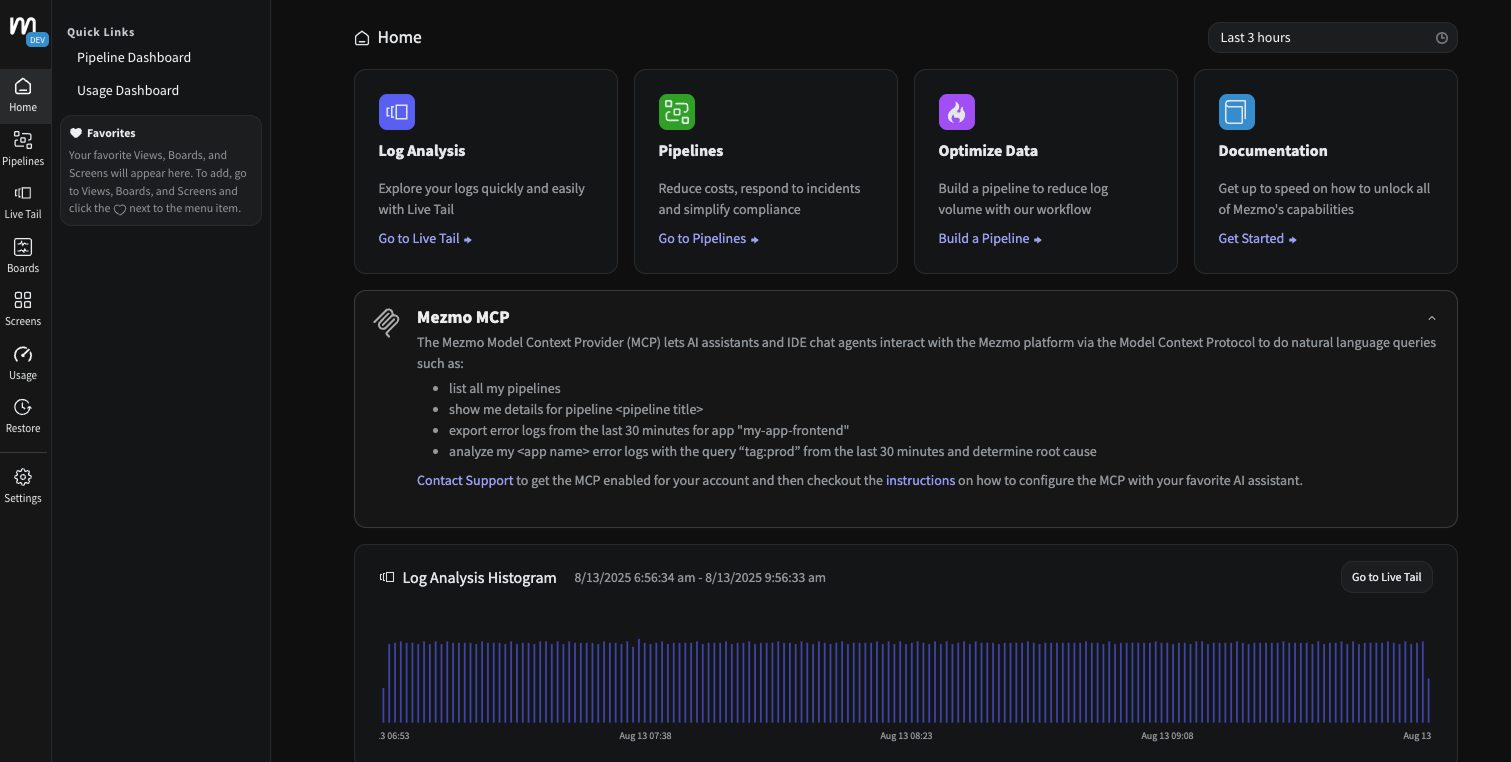

Introducing the Mezmo Model Context Protocol (MCP) Server

This is why we built the Mezmo Model Context Protocol (MCP) Server.

The MCP Server is an infrastructure layer designed to be the control plane for AI observability. It's a protocol and a service that makes AI tooling consistent, coordinated, and extensible. It does this by handling the heavy lifting of gathering and routing relevant context.

Think of it as the ultimate data orchestrator for your AI. Instead of every tool needing to independently fetch and process data, they can all connect to a single, consistent source of truth. The MCP Server takes your request—whether it's a natural language query from your IDE or an automated trigger from a CI/CD pipeline—and intelligently gathers the relevant context, like specific log lines from a time range or associated metadata, and condenses it for a given LLM.

This is where Mezmo’s core expertise in handling data in motion is a huge advantage. Our DNA is in processing, filtering, and enriching massive volumes of telemetry data in real time. This expertise is the perfect foundation for the MCP Server, allowing it to condense millions of log lines into a manageable, structured payload that fits within an LLM's limited context window. We turn a firehose of data into a single, cohesive narrative that an AI can understand and act upon.
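To make the idea of condensing concrete, here is a minimal Python sketch of one way a large batch of log lines could be reduced to a structured payload that stays under a size budget. It is an illustration only, not the MCP Server's actual implementation or API: the function names, the record fields (`ts`, `level`, `app`, `msg`), and the character budget standing in for a token limit are all assumptions made for this example.

```python
import json
import re
from collections import Counter
from datetime import datetime, timedelta, timezone


def normalize(message: str) -> str:
    """Replace runs of digits so lines that differ only in numbers group together."""
    return re.sub(r"\d+", "<n>", message)


def condense_logs(lines, start, end, max_chars=2000, levels=("ERROR", "WARN")):
    """Summarize log lines in [start, end) as JSON, staying within max_chars.

    Hypothetical record shape: {"ts": datetime, "level": str, "app": str, "msg": str}.
    """
    counts = Counter()
    first_seen = {}
    for line in lines:
        if not (start <= line["ts"] < end) or line["level"] not in levels:
            continue  # outside the requested window, or not a severity we keep
        key = (line["level"], line["app"], normalize(line["msg"]))
        counts[key] += 1
        first_seen.setdefault(key, line["ts"])

    groups = []
    payload = {
        "window": [start.isoformat(), end.isoformat()],
        "groups": groups,
        "truncated": False,
    }
    # Add the most frequent message templates first; stop when the budget is hit.
    for (level, app, template), count in counts.most_common():
        groups.append({
            "level": level,
            "app": app,
            "template": template,
            "count": count,
            "first_seen": first_seen[(level, app, template)].isoformat(),
        })
        if len(json.dumps(payload)) > max_chars:
            groups.pop()
            payload["truncated"] = True
            break
    return json.dumps(payload)


# Made-up sample data: five near-identical errors plus one INFO line.
t0 = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
sample = [
    {"ts": t0 + timedelta(seconds=i), "level": "ERROR", "app": "checkout",
     "msg": f"timeout after {30 + i} ms"}
    for i in range(5)
]
sample.append({"ts": t0, "level": "INFO", "app": "checkout", "msg": "ok"})

payload = condense_logs(sample, t0, t0 + timedelta(minutes=1))
print(payload)
```

The sketch groups lines by a normalized message template and keeps the most frequent groups first, so the model sees the dominant failure patterns rather than raw repetition. A real pipeline would likely budget in tokens rather than characters and enrich each group with metadata.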

The future of observability is not about more data; it's about smarter context. The Mezmo MCP Server is the foundation we’re building to make that future a reality.

Get started with a free trial of Mezmo and check out our MCP Server.
